Deepfakes and Generative Deep Learning Part.1 | Technological Innovation that Created Deepfakes

*This article was contributed by NABLAS Co., Ltd.

1 Introduction

The image below looks like a photograph of real people, but none of the people in it actually exist. All of them are portraits **generated** using a deep learning technology called GAN (generative adversarial network).

In 2014, the first GAN model was announced by a research group including Ian Goodfellow and Yoshua Bengio, who were doing research at the University of Montreal at the time [2].

Since then, as the computational power of GPUs for deep learning has improved significantly and researchers around the world have refined the models, GAN technology has evolved rapidly. Today, it can not only generate images so detailed and realistic that humans cannot tell them from real photographs, but also convert video in real time and generate images from text.

"GANs are the most interesting idea of the last decade," said Yann LeCun, one of the leading authorities on deep learning. On the other hand, the rapid evolution of technology has caused various social phenomena and problems.

Fig. 1 A picture of a fictitious person generated by GAN [1]

"Deepfake" is one of the technologies that are causing a particularly big social problem among AI technologies that have evolved rapidly in recent years.Report "AI-enabled future crime" by a research group at University College London (UCL), published in Crime Science, August 2020[ 3] concludes that deepfakes are one of the most serious and imminent threats among AI crimes.

In fact, since the term deepfake appeared in 2017, the number of fake videos on the internet has increased sharply. Social impact and concern are growing: in September 2020, for example, Facebook announced an operating policy of removing videos that have been manipulated (generated) by AI.

The rapid evolution of deepfake technology owes much to the development of technologies that can generate realistic data, such as **autoencoders** and **GANs**.

These technologies are highly versatile and useful, and they are expected to bring change to various industries. At the same time, they can make it appear as if a person said something that they never actually said. Depending on how they are used, they can be abused, and there are already many such cases.

In this white paper, we will look back on the evolution of deepfake and its underlying technology, and consider future social changes and technological trends.

2 Deepfake Overview

"President Trump is a hopeless idiot." In early 2018, a video of former President Barack Obama (

However, the video was a fake created by BuzzFeed and actor/director Jordan Peele, intended to warn about the problems that rapidly evolving technology poses to society. Videos like this, synthesized to make it appear as if a person is doing or saying something they never actually did or said, are called "fake videos" and have become a major social problem.

"Deepfake" is a general term for technology that creates such fake videos with high accuracy using deep learning technology.

Fig. 2 Fake video of President Obama created by deepfake technology [4]

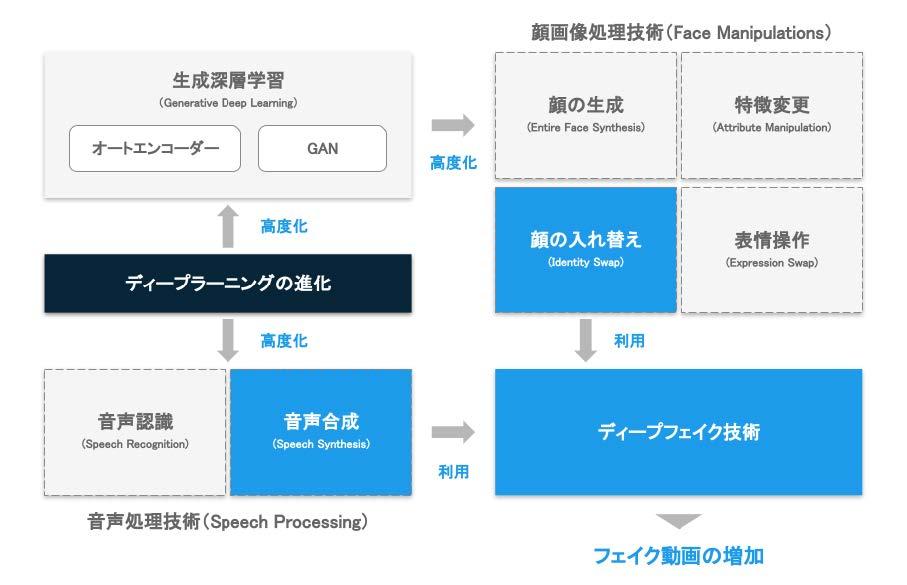

Deepfake is a synthesis technology made up of several elements, but it can be broadly divided into two technical elements: "face image processing" and "voice processing" [5]. Both have advanced greatly with the evolution of deep learning. These two techniques are described in detail below.

Fig. 3 Relationship between generative deep learning and deepfake technology

2.1 Face image processing and classification

Face image processing is closely tied to the evolution of data generation technologies such as deep learning and GANs, and the two have in some respects driven each other's progress. Compared to problem settings such as "general object recognition," which identifies a wide range of images such as cars and animals, face image processing greatly restricts the kind of data involved, which has the advantage of making it easy to collect a large amount of data.

In addition, it is relatively easy to collect data identified as the same person (data with the same attributes) through web searches and similar means, so good material was available in both quality and quantity for advancing the technology. With machine learning technologies such as deep learning, the quality of the data provided to the model is directly linked to the quality of its output, so the availability of high-quality data has played a major role in the evolution of the technology.

In the field of face image processing, a virtuous cycle emerged: as the technology evolved, more researchers and engineers prepared data, which further accelerated its evolution, and the technology advanced rapidly in a short period of time. In addition, some of the technologies born there are now also used outside face image processing as highly versatile techniques.

According to "DeepFakes and Beyond: A Survey of Face Manipulation and Fake Detection" [6], face image processing is divided by technology and application into four categories: 1) Entire Face Synthesis (face generation), 2) Identity Swap (face replacement), 3) Attribute Manipulation, and 4) Expression Swap.

Taken in a broad sense, the word "deepfake" covers all of these technologies, in the sense that they all create data that does not exist. Among them, however, the best-known category, and the one causing the greatest social problems, is Identity Swap. Techniques in each category are described below.

2.1.1 Entire Face Synthesis

The first category is "face generation" technology, which can generate the face of a fictional person who does not exist. As an industrial application, it is expected to be used to create game characters and fictitious models for advertisements. Such characters and photographs offer a high degree of freedom with respect to copyrights and portrait rights, and it is expected that they can be produced to suit the purpose while keeping production and photography costs down.

On the other hand, there is also the danger that it will be used to create fake profiles on social networking services.

Fig. 4 Generated facial image (“This person does not exist” [7])

2.1.2 Identity Swap

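The second category is technology that replaces the face of a person in an image or video with the face of another person.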

Approaches can be broadly divided into two types: 1) those using classical computer graphics (CG) technology and 2) those using deep learning technology. The classical approach requires advanced specialized knowledge and equipment for CG, as well as a great deal of labor, whereas deep learning has made it possible to create natural-looking images and videos easily.

As an industrial application, it is expected to be used in the production site of movies and video works. However, as the technology evolves, it is being abused for purposes such as fabricating evidence videos, fraud, and generating pornographic videos, and has already resulted in arrests in several countries, including Japan.

Fig. 5 Image with faces swapped by Identity Swap (Celeb-DF dataset [8])

"Datasets", which are large collections of real videos (images) and fake videos, have greatly contributed to the evolution of face-swapping technology. Fake videos include those created by classical methods and those created using deepfake technology at the time. According to [6], fake video datasets can be classified into two typesOn the other hand, the quality of the second-generation datasets has improved significantly, and the scene variations have increased.

Various scenarios, such as indoor and outdoor shots, are included, along with videos that vary in the lighting of the people appearing in them, their distance from the camera, and their pose. In addition, when several AI-based fake video detectors were evaluated, fake videos in the second-generation datasets proved more difficult to detect than those in the first-generation datasets.
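The comparison described above, running a fake-video detector over datasets of each generation and checking how often it is right, can be sketched as follows. This is a minimal illustration and not the evaluation protocol of [6]: the `Sample` record, the `accuracy_by_generation` helper, and the stand-in detector are all hypothetical names, and the sample entries are placeholders rather than real data or results.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class Sample:
    """One labeled video in a fake-video dataset (hypothetical record layout)."""
    video_id: str
    is_fake: bool      # ground-truth label
    generation: int    # 1 = first-generation dataset, 2 = second-generation


def accuracy_by_generation(
    samples: Iterable[Sample],
    detector: Callable[[Sample], bool],
) -> Dict[int, float]:
    """Return the fraction of correctly classified samples per dataset generation."""
    correct: Dict[int, int] = defaultdict(int)
    total: Dict[int, int] = defaultdict(int)
    for sample in samples:
        total[sample.generation] += 1
        # The detector returns True when it judges the video to be fake.
        if detector(sample) == sample.is_fake:
            correct[sample.generation] += 1
    return {gen: correct[gen] / total[gen] for gen in total}


# Placeholder samples, only to show how the helper is called.
samples = [
    Sample("a", is_fake=True, generation=1),
    Sample("b", is_fake=False, generation=1),
    Sample("c", is_fake=True, generation=2),
    Sample("d", is_fake=False, generation=2),
]


def always_real(sample: Sample) -> bool:
    """Stand-in detector that never flags a video as fake."""
    return False


scores = accuracy_by_generation(samples, always_real)
```

With a real detector in place of `always_real`, a lower score for generation 2 than for generation 1 would correspond to the observation that second-generation fakes are harder to detect.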

Fig. 6 Comparison of the 1st and 2nd generation of fake video database (1st generation photos are FaceForensics++[9], 2nd generation photos are Celeb-DF dataset[8])

2.1.3 Attribute Manipulation

The third category is technology that modifies facial attributes such as hairstyle, gender, and age. As an industrial application, it is expected to let patients preview the result before an operation by using it in simulations for cosmetic and orthopedic surgery. In addition, because age and other attributes can be changed freely in movies and dramas, it is expected to revolutionize production processes that currently rely mainly on special makeup.

2.1.4 Expression Swap

The fourth category is technology for manipulating and changing human facial expressions in videos and images. Expected industrial applications include video production and editing, online meetings, and the operation of avatars on YouTube. On the other hand, it has been pointed out that it may be used for fraud and for manipulating public opinion, and this technology was also used in the well-known fake video of Mark Zuckerberg [12][6].

Fig. 7 Image whose features have been corrected by Attribute Manipulation (The original human image was created using the FFHQ dataset [10] and FaceApp [11] to change the features)

Fig. 8 Face image with facial expression manipulated by Expression Swap (FaceForensics++ [9])

2.2 Audio Processing and Classification

As mentioned above, deepfake is a technology that combines video synthesis and audio synthesis. This section describes the latter, audio processing technology.

Like video synthesis, speech synthesis is a technological area that has evolved rapidly over the past few years along with deep learning [13]. In general, speech processing consists of two fields: speech recognition (speech-to-text), which converts speech into text, and speech synthesis (text-to-speech), which creates speech data from text [13][14]. Research has been conducted in both fields for a long time.

In addition to these two fields, the technical field of voice cloning, which replicates the voice of a specific person, has developed rapidly in the last few years, and is often used as a deepfake technology. Techniques in each category are described below.

2.2.1 Speech Recognition

Speech recognition is a general term for technologies that allow computers to recognize the content of speech data, and mainly refers to technology that converts human speech into text. Speech recognition is used in various situations such as smart home appliances, smartphones, and computer input.

Conventional speech recognition was commonly realized by combining many components: 1) acoustic analysis, 2) processing steps such as the decoder, 3) the acoustic model, and 4) data (dictionaries) such as the language model. Since each component was often researched and developed separately, improving the overall performance of speech recognition required not only improving each process but also technology for integrating (connecting) them appropriately.

As a result, earlier processing often affected the performance of later processing, and achieving high overall performance was generally known to be a technically difficult problem.

On the other hand, methods based on deep learning, which have grown rapidly in recent years, integrate multiple or many of the above processes. As a result, the pipeline as a whole has been simplified and its flexibility has increased. At the same time, the increase in the amount of data and the development of computer technology have greatly improved the accuracy of speech recognition.
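The way errors in earlier components limit a multi-stage pipeline can be illustrated with a small calculation. The sketch below makes a strong simplifying assumption that is not from this article: each stage is either right or wrong on an input, stages fail independently, and a later stage cannot recover from an earlier error. Under that assumption, the accuracy of the whole pipeline is the product of the per-stage accuracies. The function name and the example figures are hypothetical.

```python
import math
from typing import Sequence


def pipeline_accuracy(stage_accuracies: Sequence[float]) -> float:
    """Overall accuracy of a cascade, assuming independent, unrecoverable stage errors."""
    for acc in stage_accuracies:
        if not 0.0 <= acc <= 1.0:
            raise ValueError(f"accuracy must be in [0, 1], got {acc}")
    # Every stage must succeed for the final output to be correct.
    return math.prod(stage_accuracies)


# Hypothetical example: four stages that are each 95% accurate on their own.
four_stage = pipeline_accuracy([0.95, 0.95, 0.95, 0.95])  # roughly 0.81
```

Even when every component looks strong in isolation, the combined figure is noticeably lower, which is one intuition for why integrating several stages into a single learned model can help.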

“Alexa Conversations” [15] is one example of a product that uses a deep learning-based approach.

Fig. 9 Classification of speech processing technology

2.2.2 Text-to-speech

In contrast to speech recognition, technology that outputs synthesized speech is called speech synthesis. Within it, the technique of synthesizing speech from text input is called text-to-speech (TTS).

Conventional technology suffered from conspicuously unnatural intonation and joins between words, but in recent years, as methods based on deep learning have become common, performance in this field has improved dramatically.

It is now possible to synthesize natural-sounding voices that handle the intonation of long sentences, and also to switch between multiple speakers and control tone and emotion. Improved versions of models such as WaveNet [14] have been put to practical use in various places, such as the Google Assistant and Google Cloud's multilingual speech synthesis service.

A typical industrial application of text-to-speech technology is reading text aloud. Applications are wide-ranging, including voice guidance for drivers, virtual assistants, and interfaces for visually impaired people, and many services and products built on the above technologies already exist.

2.2.3 Voice Cloning

As an advanced form of speech synthesis, a technology has emerged that takes the voice of a specific speaker as input in addition to text, and synthesizes speech that sounds as if that speaker were saying it. Such technology is called voice cloning and is often used in deepfakes.
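The difference between the three speech technologies is easiest to see in their inputs and outputs. The following sketch expresses them as Python type signatures. All names (`Audio`, `SpeechRecognizer`, `TextToSpeech`, `VoiceCloner`) are hypothetical and do not correspond to any particular library, and `SilentCloner` is a dummy that only demonstrates the call shape.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Audio:
    """Raw waveform plus its sample rate (hypothetical container)."""
    samples: List[float]
    sample_rate: int


class SpeechRecognizer(Protocol):
    # Speech recognition: speech in, text out.
    def transcribe(self, speech: Audio) -> str: ...


class TextToSpeech(Protocol):
    # Text-to-speech: text in, speech out.
    def synthesize(self, text: str) -> Audio: ...


class VoiceCloner(Protocol):
    # Voice cloning: text plus a reference recording of the target speaker in,
    # speech in that speaker's voice out.
    def synthesize(self, text: str, reference_voice: Audio) -> Audio: ...


class SilentCloner:
    """Dummy cloner: returns silence at the reference sample rate, no real model."""

    def synthesize(self, text: str, reference_voice: Audio) -> Audio:
        n_samples = len(text) * 100  # arbitrary placeholder length
        return Audio([0.0] * n_samples, reference_voice.sample_rate)


def clone(cloner: VoiceCloner, text: str, reference: Audio) -> Audio:
    """Call any object that satisfies the VoiceCloner interface."""
    return cloner.synthesize(text, reference)


reference = Audio(samples=[0.0] * 16000, sample_rate=16000)
output = clone(SilentCloner(), "hello", reference)
```

The only difference between `TextToSpeech` and `VoiceCloner` in this sketch is the extra `reference_voice` argument, which mirrors the description of voice cloning as text-to-speech conditioned on a specific speaker.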

Conventionally, it was a difficult technology to handle, requiring advanced expertise and a large amount of voice data from the speaker to be cloned. Today, however, cloning has become much easier.

Voice cloning already has some industrial applications. Examples include creating videos that convey important messages in the voice of a deceased person (although the pros and cons of this are debatable) and automatically creating and distributing radio-style audio from blogs and websites.

In addition, it is expected to restore the voices of people who have lost their voices, such as ALS patients, to support communication, and to create dubbed videos. Furthermore, it is also used for editing purposes such as partially correcting long audio data recorded for radio or distribution, or audio data that is difficult to re-record.

On the other hand, there have already been cases where it has been misused for purposes such as fraud.

References

[1] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” arXiv:1812.04948, 2018.
[2] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, et al., “Generative adversarial networks,” arXiv:1406.2661, 2014.
[3] M. Caldwell, J. Andrews, T. Tanay, and L. Griffin, “AI-enabled future crime,” Crime Science, vol. 9, no. 1, pp. 1–13, 2020.
[4] “You Won’t Believe What Obama Says In This Video!” BuzzFeedVideo; https://www.youtube.com/watch?v=cQ54GDm1eL0.
[5] “What are deepfakes – and how can you spot them?” https://www.theguardian.com/technology/2020/jan/13/what-are-deepfakes-and-how-can-you-spot-them, Jan. 2020.
[6] R. Tolosana, R. Vera-Rodriguez, J. Fierrez, A. Morales, and J. Ortega-Garcia, “Deepfakes and beyond: A survey of face manipulation and fake detection,” Information Fusion, 2020.
[7] “This Person Does Not Exist.” https://thispersondoesnotexist.com/, 2019.
[8] Y. Li, P. Sun, H. Qi, and S. Lyu, “Celeb-DF: A Large-scale Challenging Dataset for DeepFake Forensics,” 2020.
[9] A. Rössler, D. Cozzolino, L. Verdoliva, C. Riess, J. Thies, and M. Nießner, “FaceForensics++: Learning to detect manipulated facial images,” 2019.
[10] “Flickr-Faces-HQ Dataset (FFHQ).” Flickr; https://github.com/NVlabs/ffhq-dataset, 2019.
[11] “FaceApp – AI Face Editor.” https://apps.apple.com/gb/app/faceapp-ai-face-editor/id1180884341.
[12] “Facebook lets deepfake Zuckerberg video stay on Instagram.” BBC News; https://www.bbc.com/news/technology-48607673, 2019.
[13] G. Hinton, L. Deng, D. Yu, et al., “Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups,” IEEE Signal Processing Magazine, vol. 29, 2012.
[14] A. van den Oord, S. Dieleman, H. Zen, et al., “WaveNet: A generative model for raw audio,” arXiv:1609.03499, 2016.
[15] “About Alexa Conversations.” Amazon.

Author info

| Representative Director of AI Research Institute NABLAS Co., Ltd. |

Belongs to the Faculty of Buddhism, Komazawa University. I'm addicted to YouTube and K-POP. I am interested in how AI will relate to religion in the future and how it will affect the lives of Buddhists.
