An AI version of the "vehicle inspection system" is emerging: How can we build AI that does not discriminate? "Quality assurance" has become an industry overseas

Social Principles of Human-Centric AI (excerpt from Cabinet Office materials)

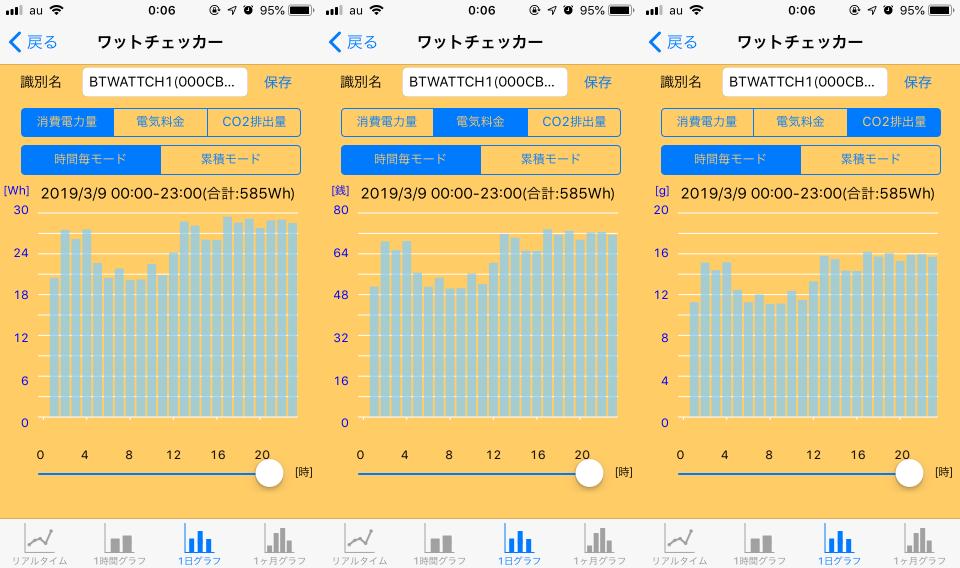

According to a forecast released by IDC Japan in June 2021, Japan's domestic AI market will keep growing rapidly even after the COVID-19 pandemic, reaching approximately 500 billion yen by 2025. IDC attributes this to the fact that "COVID-19 has reaffirmed the importance of corporate transformation, and as a result, investment in AI has accelerated."

[Image: Overview of the AI Governance Guidelines (excerpt from materials released by the Ministry of Economy, Trade and Industry)]

Introducing AI brings many benefits. At the same time, as has been widely reported, problems have arisen because the quality of AI could not be maintained. These include business losses caused by AI misjudgments and discrimination against particular genders, races, and social strata. Many of these problems are not intentional; they are the cumulative result of small mistakes, negligence, and poor risk management.

As a new technology spreads, efforts are needed to prevent such mistakes and maintain quality. Automobiles, for example, are subject to a vehicle inspection system (the automobile inspection and registration system), and these inspections are compulsory. Rules were put in place to maintain quality so that society could enjoy the benefits of the automobile as a new technology while avoiding its downsides. Similarly, as AI spreads rapidly, we can expect systems for inspecting and guaranteeing its quality to be developed.

How to guarantee the quality of AI

Various organizations and institutions are already developing rules and guidelines to guarantee the quality of AI, and many of them go beyond abstract principles to provide detailed guidance. In some cases, governments have taken the lead in presenting such rules.

In Japan, the Cabinet Office established the "Council for Social Principles of Human-Centric AI" in May 2018 and announced the "Social Principles of Human-Centric AI" in March 2019. The principles are positioned as "basic principles that society should keep in mind in order to accept and use AI appropriately." Although their content is, as the name says, limited to principles, many companies have no specific rules regarding AI, so the principles serve as the starting point for discussions on developing such rules.

Guidance on specific actions for putting these principles into practice has also been published. On July 9, 2021, the Ministry of Economy, Trade and Industry announced the "Governance Guidelines for Practicing AI Principles" (Version 1.1 was released on January 28, 2022). As the title suggests, the guidelines were created to put the Social Principles of Human-Centric AI into concrete practice. They have no legal binding force and are intended to serve as a reference. They set out the action targets that AI business operators should implement (21 items, including sub-items), practical examples for each action target, and examples of assessing gaps against the AI principles, explaining specific actions in detail. Because the guidelines are not legally binding, each business operator decides what actions to take, but for companies building a governance system to ensure the quality of their AI, they provide a basis for that work.

On the other hand, there are also cases, though still limited, in which AI quality assurance is made mandatory in various forms.
One notable example is Int 1894-2020, a bill passed in New York City at the end of 2021. It requires employers and others that use AI-based employment decision tools (applications that automatically decide, for example, whether to hire an applicant or whether to promote a particular employee) to conduct a "bias audit." As defined in the bill, a bias audit is an impartial evaluation by an independent auditor of whether the AI makes discriminatory decisions based on factors such as race and gender. Employers using such tools must conduct a bias audit once a year and publicly disclose the results. The bill also requires that candidates and employees subject to the tool be notified in advance of its use, and that an evaluation method other than the AI tool (that is, conventional human judgment) be made available if they request it.

Although the bill passed the City Council, it unfortunately had not taken effect as of December 13, 2021, because then-New York City Mayor Bill de Blasio did not sign it. Critics have also argued that, even if it took effect, an "impartial bias audit by an independent auditor" would be difficult to carry out in practice, and that even where it could be done, the high cost could hinder the adoption of AI tools. Still, the fact that a legislature once passed such a rule suggests that similar regulations may spread in the future.
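To make the idea of a bias audit more concrete, the following is a minimal Python sketch of one common check: comparing selection rates across groups and computing each group's impact ratio relative to the most-selected group. This is an illustration under stated assumptions, not the method defined by the bill. The impact-ratio metric and the 0.8 threshold (the US "four-fifths rule" from employment-discrimination practice) are assumptions, and the function names, group labels, and data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of candidates selected in each group.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True if the (hypothetical) AI tool advanced that candidate.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so the ratios are undefined.
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the threshold.

    The 0.8 default mirrors the US "four-fifths rule"; it is an assumption
    here, not a value taken from the New York City bill.
    """
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical audit data: 100 candidates in each group.
    decisions = (
        [("group_a", True)] * 40 + [("group_a", False)] * 60
        + [("group_b", True)] * 25 + [("group_b", False)] * 75
    )
    rates = selection_rates(decisions)
    ratios = impact_ratios(rates)
    print(rates)                # {'group_a': 0.4, 'group_b': 0.25}
    print(ratios)               # {'group_a': 1.0, 'group_b': 0.625}
    print(flag_groups(ratios))  # ['group_b']
```

A real audit involves much more than this ratio. The choice of categories, sample sizes, and statistical significance all matter, and no code can supply the auditor independence that the bill calls for.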
